NNUE performance improvements

This document lists and describes performance improvements that are applicable to efficiently updatable neural networks in chess engines.

As a primer, I will quickly explicate the functioning of these networks. I will not cover the mathematical theory, which is better described by the author of the Bullet NNUE trainer, here. These networks invariably make use of float-to-integer quantisation of parameters, allowing floating-point calculations to be approximated in integer arithmetic, with two main benefits - first, integer arithmetic is somewhat faster than floating-point, and second, one can quantise from float32 down to int16 (or even int8), allowing for SIMD (Single-Instruction Multiple-Data) CPU instructions to operate on a larger number of neuron activations at once.

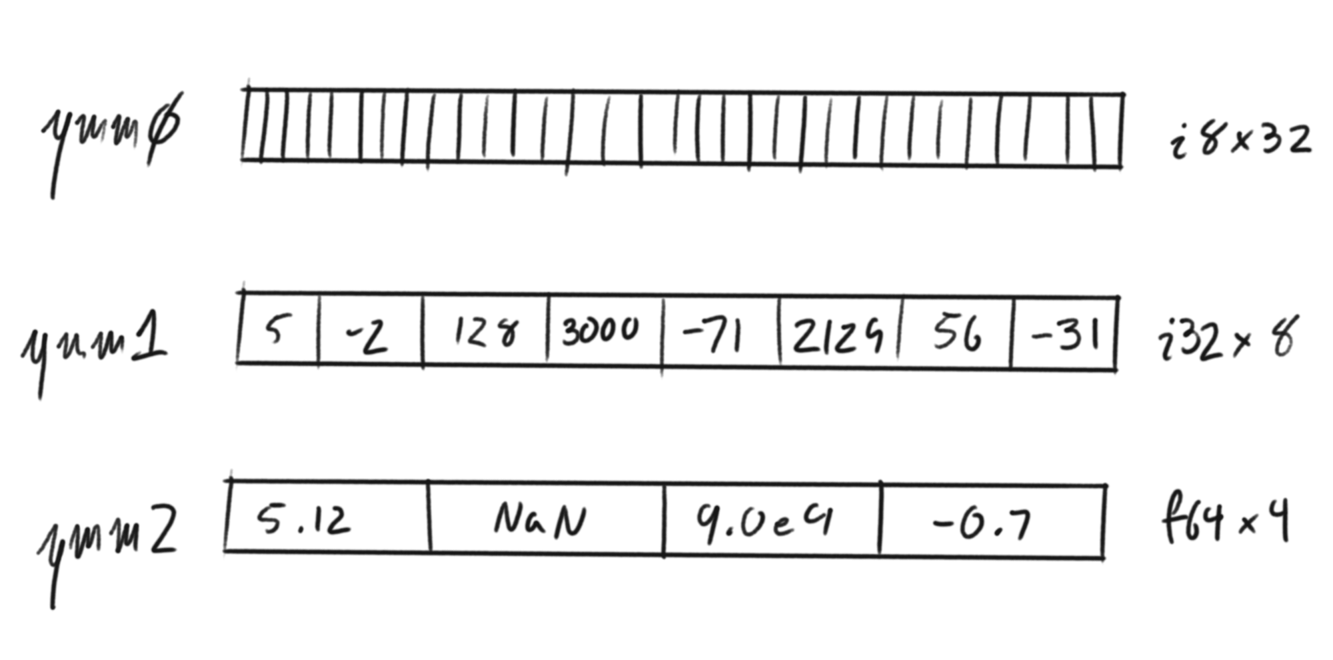

Representation of how values are packed into SIMD registers:

(image credit to McYoung, © 2024 Miguel Young de la Sota)

Important components of an NNUE system

The accumulator

The whole point of NNUE is to incrementally modify an internal state of neural activations - specifically, the activations of the very first layer. These incrementally-updated activations are stored in an array called an accumulator.

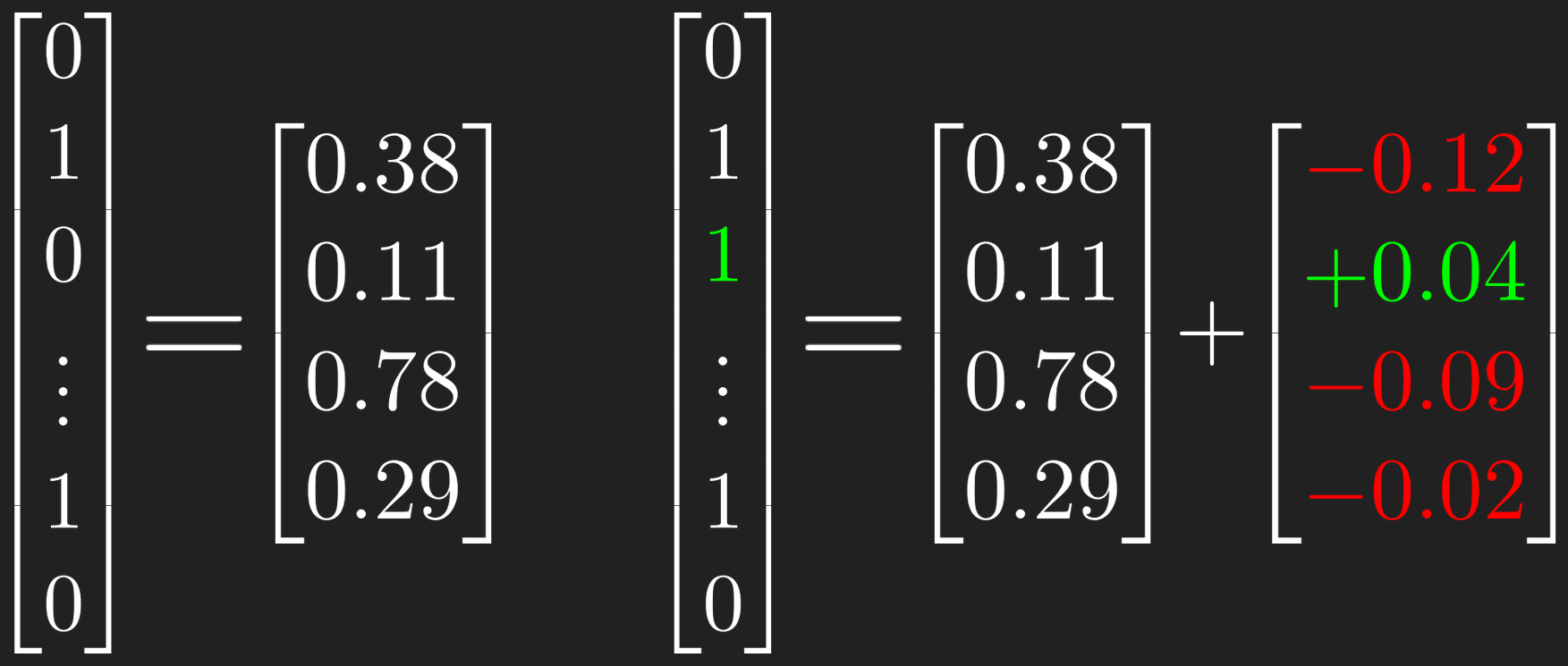

It is possible to maintain such a structure efficiently because the input features of NNUE are entirely binaryTechnically, this would work with trinary inputs of the form , too. ↩, and so whenever a feature changes we can simply add or subtract a component of the feature transformer matrix to generate a new accumulator from an old one.

We also avoid the need to do any activation when a feature changes, because the accumulator stores the pre-activation values of the neurons - if the output of a neuron is , then the accumulator stores the values that are the inputs to the activation function .

Hopefully this diagram should make it clearer as to what's going on:

And here's corresponding code for adding a certain feature to an accumulator state:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 | |

So, for example, if you wanted to tell the network that a white knight has appeared on E3, then you'd run

1 2 | |

The output layers

The layers after the feature transformer operate on non-binary inputs, and as such cannot benefit from efficient updates. As such, we are forced to make these layers few and small, with most engines employing only one post-FT layer that simply calculates the evaluation directly from the activated accumulator.

Fused updates

Almost invariably, multiple feature updates are happening at once. There are three important cases:

- a quiet move where a piece moves from some square to another square will incur two feature updates, one add and one subtract.

- a capture involves three updates, two subtractions (captured piece and source square) and one addition (target square).

- castling involves four updates, for the source/target squares of the rook and king.

We can take advantage of this fact, and can avoid needless interstitial operations on the accumulator memory by operating on these features all at the same time. This manifests in functions that perform multiple additions and subtractions of feature vectors on the accumulator at the same time, often with unwieldy names like multiAddAddSubSub.

This looks essentially the same as the feature code shown before, but uses different memory for the old and new accumulators, as we maintain a stack of such accumulators to ease move make/unmake.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 | |

And here is code for dispatching to these various functions depending on the sort of update we're looking to apply:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 | |

Lazy updates

In some circumstances, it can be a waste to update the accumulator, as it will not actually end up being needed to evaluate a position. As such, it can be better to keep track simply of the features that have changed, and only to force accumulator materialisation when one gets to the point of actually calling evaluate().

Here's an example of code that manages the creation of new accumulators along the stack when an evaluation is actually needed:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 | |

Bucket Accumulator Caching, or, “Finny Tables”

Often, NNUE architectures make use of a technique called “king buckets”, or often colloquially just “buckets”. This refers to a technique where the weights of the feature transformer layer are dynamically switched depending on the location of the king on the board. This is explained in significant detail here.

As a result, when the king “changes bucket”, a total re-creation (or “refresh”) of the accumulator is required, as a totally different set of weights are being used to calculate the accumulator. As such, it might seem like we cannot do incremental updates - but we can! By maintaining a cache with N_BUCKETS slots, we can record previously seen positions that satisfy the king position for a certain bucket, alongside accumulators for such positions. Then, when the time comes that the search is exploring moves that change the king bucket, and requests an evaluation of a position with a changed feature transformer, we can look up the corresponding entry in this accumulator cache, compute a feature-difference between the cached position and the position that we are trying to evaluate, and incrementally update from that position, instead of recreating our accumulator ex nihilo.

Example code for probing the cache is given - note that this probing function also populates the cache, and works even if the cache is filled with null positions. As such, no store_accumulator_for_position function is required.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 | |

Lizard SIMD for squared clipped ReLU

Neural networks use activation functions. These tend to be simple differentiable nonlinear functions that allow the network to approximate complex functions. In our networks, we typically use the Rectified Linear Unit, ReLU, and some derivatives thereupon. Advanced deep learning models tend to use smooth ReLU-enhancements like GELU or GLU variants, but these are generally too costly to compute for us. A consideration imposed by quantisation is that we have a fixed max activation size before we suffer integer overflow - generally , i16::MAX. As such, we employ clipped ReLU, to ensure that we avoid overflows. A further enhancement is squared clipped ReLU (SCReLU), , which remains cheap to compute but provides valuable additional nonlinearity to the model, allowing our extremely shallow models to capture more of the dynamics of the data. Not all engine developers have found SCReLU an improvement over ReLU/CReLU, but many have seen strong results.

Squaring poses an additional problem over CReLU, that of integer overflow - if we quantise to, say, , which is the default of many trainers, then implementing squared clipped ReLU naively as clamp(x, 0, QA).pow(2) * w forces the activation-weight multiplication to occur in i32s, as , which is greater than i16::MAX.

A now-obsolete trick to mitigate this is to set , the largest possible value of for which is still less than i16::MAX, but this suffers significantly from the accuracy losses inherent in quantisation, and this becomes particularly painful in longer time controls, where quality of evaluation becomes relatively more important than speed.

The author of the Lizard chess engine invented a scheme that allows us the best of both worlds - large for high-accuracy evaluation, while still performing multiplication entirely in 16-bit.

The scheme is as follows:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 | |

We inject the weight-multiply after the clipped ReLU and before squaring, so we can fit all of our work inside 16-bit multiplies!

Important point: the output weight in this code is guaranteed to be in as a result of weight clipping to of . The Motor chess engine had success with narrowing the weight clipping bounds to and correspondingly increasing to .

Register juggling

When constructing an accumulator from the bucket-accumulator cache, we must do a reasonably large number of updates to an accumulator. The naive approach leads to needless loads/stores to the accumulator, when all subtractions and additions can be done piece-wise, only storing the full-updated values.

Consider the difference between

1 2 3 4 5 6 7 8 9 10 11 | |

and

1 2 3 4 5 6 7 8 9 10 11 12 13 | |

The first implementation does a load and a store per loop iteration, whereas the second implementation does one load and one store, total. Strictly, Rust's mutable-reference-uniqueness-guarantee should allow the first snippet to be optimised into the secondAs proof that rustc can in fact achieve this, see this godbolt example. ↩, but in NNUE inference we're typically working with unsafe calls directly to SIMD intrinsics where the compiler may perhaps be less able to slice through to see our intent.

As an attempt to make use of this optimisation, I made this modification to my in-place vector modification function, at the suggestion of Engine Programming user fireandice, author of Titan. Note that this version does a sort of hot-potato dance with multiple local variables all arranged in a vector, which has a number of elements that should fit entirely in SIMD registers. Making this work in a robust, architecture-generic fashion took a while, as shown in my results table:

| Configuration | Speed (% of master) |

|---|---|

master |

100.00% |

| single-intermediate variable | 95.70% |

| 8-register array | 94.84% |

| 16-register array | 94.57% |

| fireandice's verbatim code (not avx512-compatible) | 101.92% |

| 16-register array v2 (transposed) | 100.91% |

| 16-register array v3 (transposed, iteratorised, hoisted) | 100.74% |

| 16-register array v4 (transposed, iteratorised x2, hoisted, no-bounds-check) | 103.33% |

This approach of explicitly forcing the use of N registers works in other areas of NNUE inference, but my experiments with applying it to the fused update code resulted in a small (~0.6%) slowdown. The final code for accumulator construction after this optimisation is as follows:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 | |